Google Gemma for Real-Time Lightweight Open LLM Inference

Apache NiFi, Google Gemma, LLM, Open, HuggingFace, Generative AI, Gemma 7B-IT

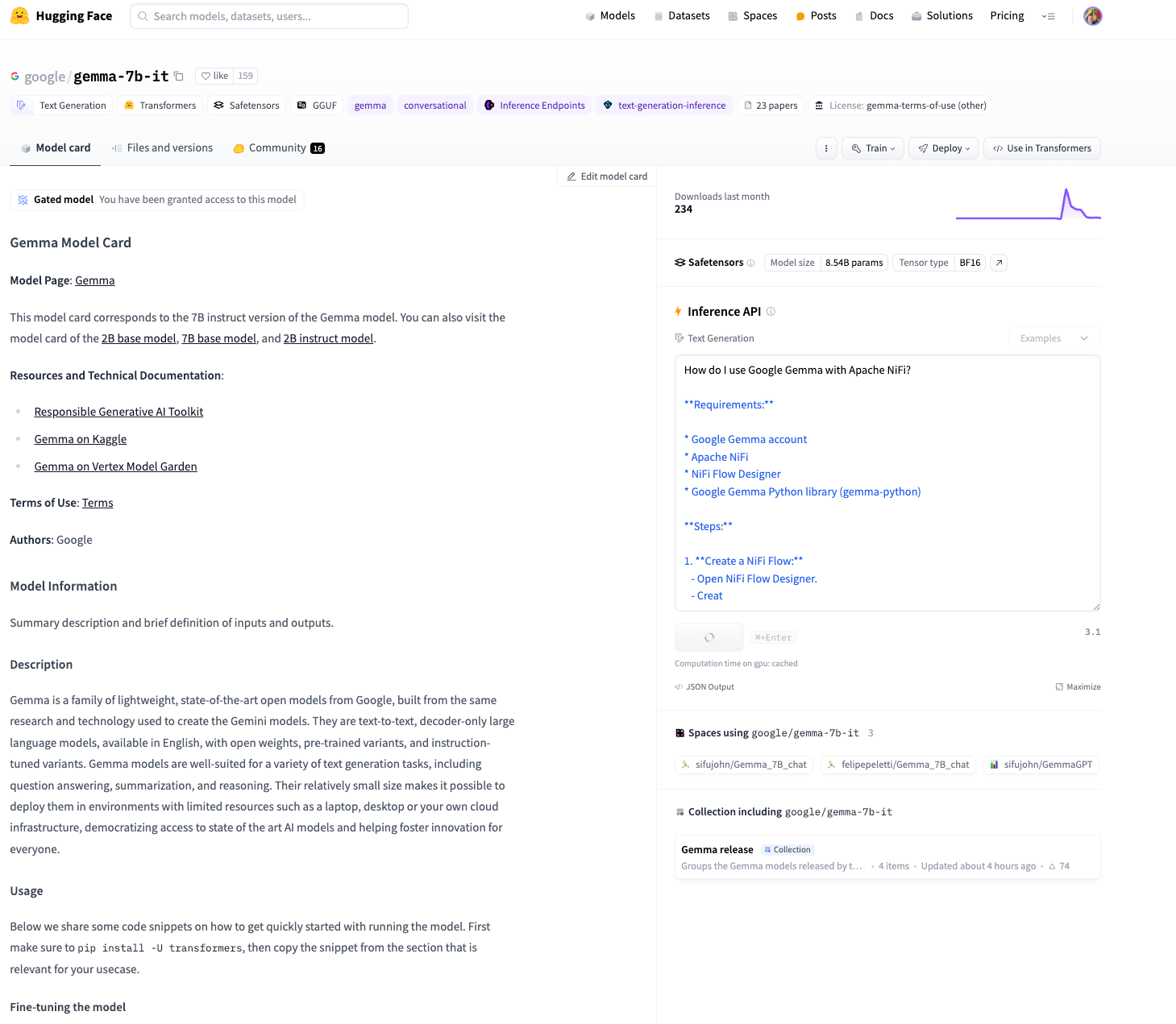

When I saw the new model out on HuggingFace, I had to try it with Apache NiFi in some Slack pipelines and compare it to ChatGPT and watsonx.ai.

This looks like a fast, interesting new open large language model, so I am going to give it a try. Let’s go. As I am short on disk space, I am going to call it via the HuggingFace REST Inference API. There are many other ways to use the models, including HuggingFace Transformers, PyTorch, KerasNLP/Keras/TensorFlow and more. We will try both 2B-IT and 7B-IT.
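For anyone who wants to try the same REST call outside NiFi, here is a minimal Python sketch using only the standard library. It assumes the serverless Inference API URL pattern `https://api-inference.huggingface.co/models/<model-id>` and a HuggingFace access token; the helper names `build_gemma_request` and `call_gemma` are hypothetical, and the endpoint may have changed since this was written, so check the current HuggingFace docs.

```python
import json
import urllib.request

# Assumption: serverless Inference API URL pattern; verify against current HF docs.
HF_API_BASE = "https://api-inference.huggingface.co/models/"


def build_gemma_request(prompt: str, token: str,
                        model_id: str = "google/gemma-7b-it") -> urllib.request.Request:
    """Build (but do not send) a POST request for a hosted text-generation model."""
    body = json.dumps({"inputs": prompt}).encode("utf-8")
    return urllib.request.Request(
        HF_API_BASE + model_id,
        data=body,
        headers={
            "Authorization": f"Bearer {token}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


def call_gemma(prompt: str, token: str, model_id: str = "google/gemma-7b-it"):
    """Send the request and decode the JSON response (needs network access)."""
    req = build_gemma_request(prompt, token, model_id)
    with urllib.request.urlopen(req, timeout=60) as resp:
        return json.loads(resp.read().decode("utf-8"))
```

`call_gemma("What is Apache NiFi?", token)` returns the decoded JSON; pass `model_id="google/gemma-2b-it"` to try the 2B-IT model instead.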

Google Gemma on HuggingFace

This is really easy to start using. We can test it on the website before we roll it out in NiFi.

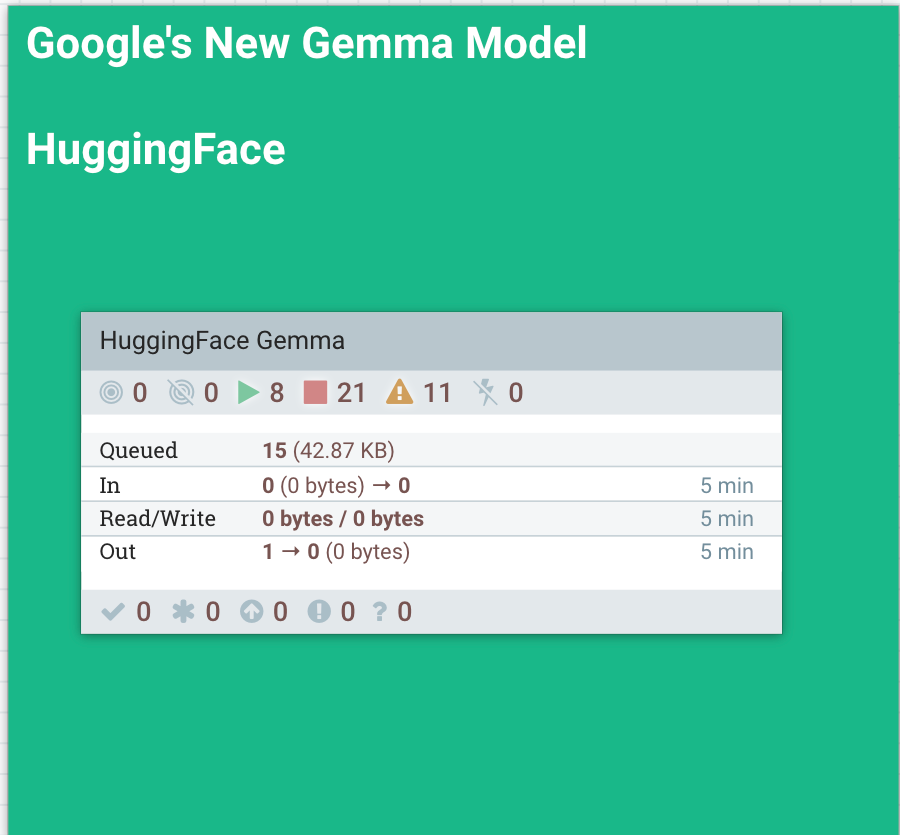

Real-Time DataFlow With Google Gemma 7B-IT

Source Code:

FLaNK for using HuggingFace hosted Open Google Model Gemma: tspannhw/FLaNK-Gemma (github.com)

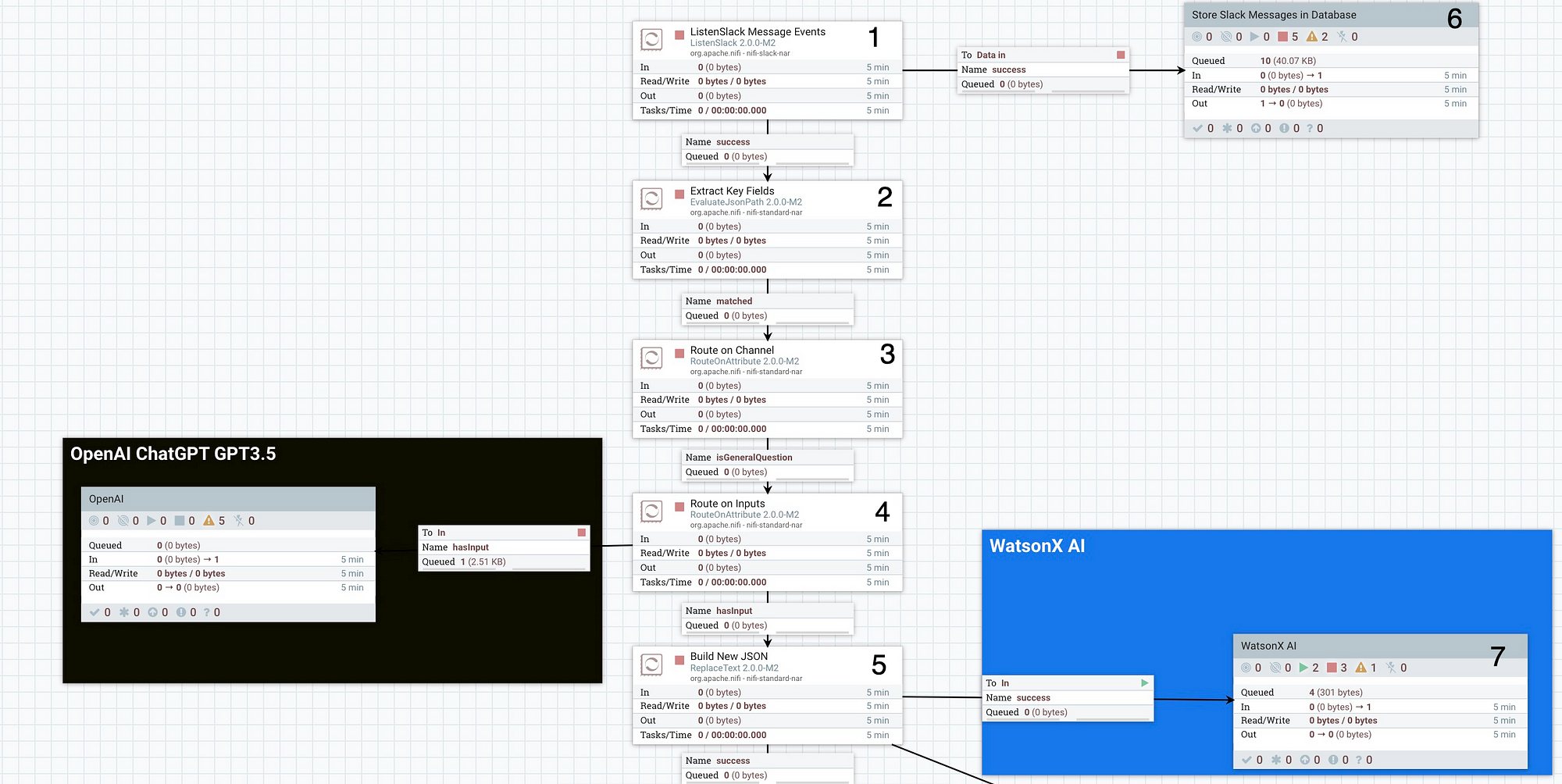

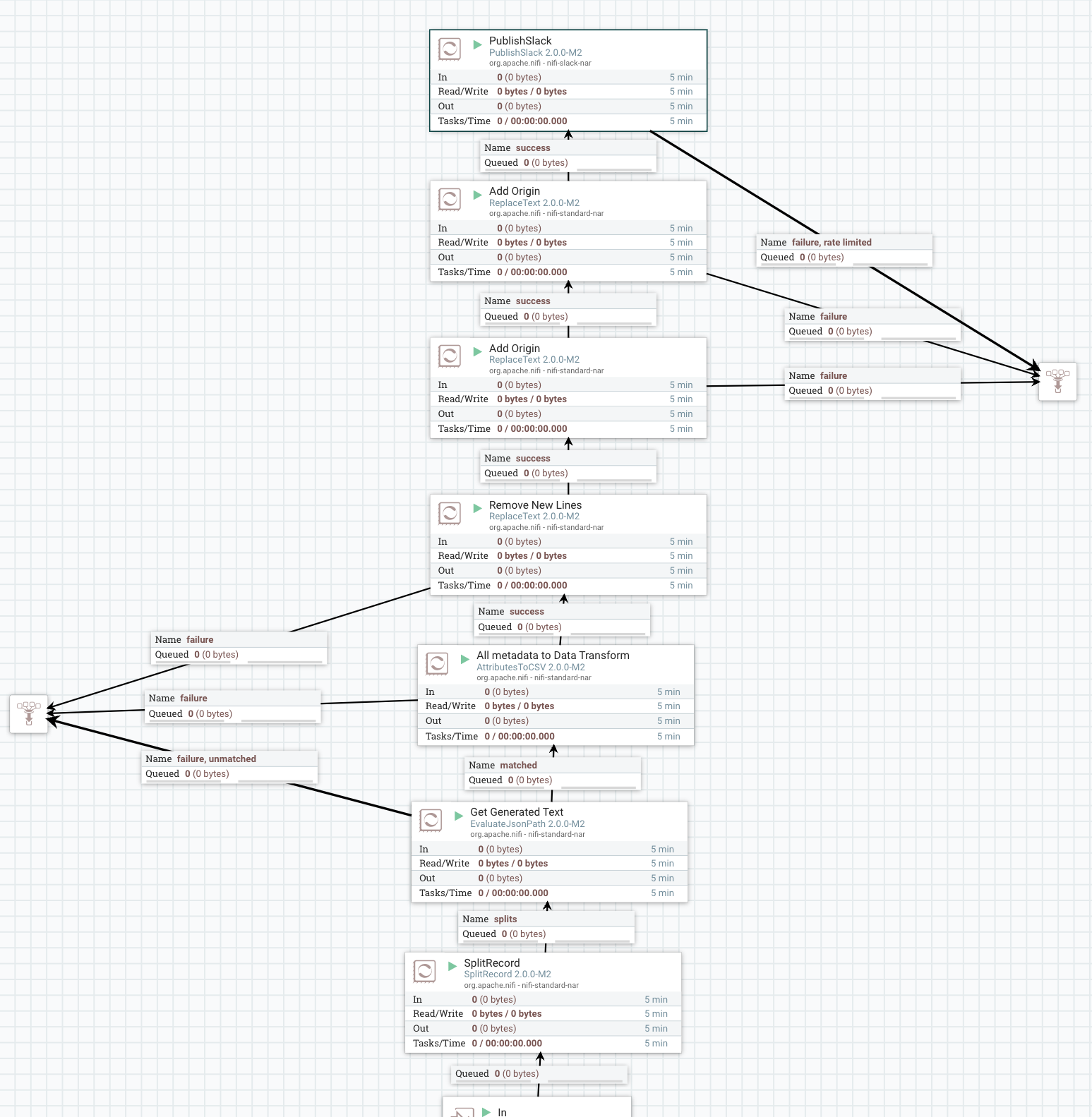

1. ListenSlack — We connect via the new Slack Socket Mode and receive chat messages

2. EvaluateJsonPath — We parse out the fields we want (the raw copy is sent elsewhere in step 6)

3. RouteOnAttribute — We only want messages in the “general” channel

4. RouteOnAttribute — We only want real messages

5. ReplaceText — We build a new file to send

6. ProcessGroup — We process the raw JSON message from Slack in a sub process group

8. InvokeHTTP — We call the Google Gemma model hosted on HuggingFace

9. QueryRecord — We clean up the JSON and return 1 row

10. UpdateRecord — We add fields to the JSON file

11. UpdateAttribute — We set headers

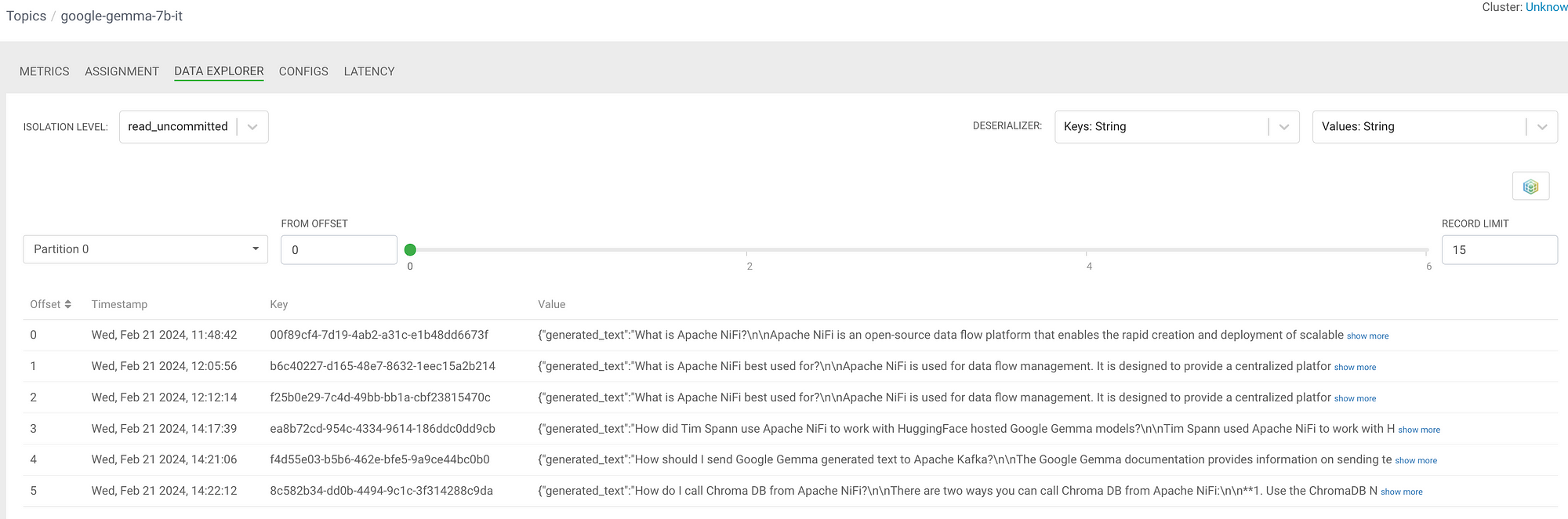

12. PublishKafkaRecord_2.6 — We send the data via Kafka

13. RetryFlowFile — If the Kafka send fails, we retry three times and then fail

14. ProcessGroup — In this sub process group we clean up and enrich the Google Gemma results and send them to Slack.
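Steps 2 through 5 boil down to a filter plus a payload builder. The Python sketch below is not the NiFi flow itself, just an illustration under assumptions: the channel name is passed in separately (Slack events usually carry a channel ID, so the flow has to match the name somehow), and “real messages” is taken to mean a `message` event with no `subtype`, no `bot_id` and non-empty text. `TARGET_CHANNEL`, `is_real_message` and `to_inference_payload` are hypothetical names.

```python
import json
from typing import Optional

# Hypothetical: the channel name the routing step accepts.
TARGET_CHANNEL = "general"


def is_real_message(event: dict) -> bool:
    """Assumed definition of a real message: a plain message event with
    non-empty text, no subtype (joins, edits, ...) and no bot_id."""
    text = event.get("text") or ""
    return (
        event.get("type") == "message"
        and not event.get("subtype")
        and not event.get("bot_id")
        and bool(text.strip())
    )


def to_inference_payload(event: dict, channel_name: str) -> Optional[str]:
    """Route on channel and message type, then build the HuggingFace body.

    Returns None when the event would be filtered out.
    """
    if channel_name != TARGET_CHANNEL or not is_real_message(event):
        return None
    return json.dumps({"inputs": event["text"].strip()})
```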

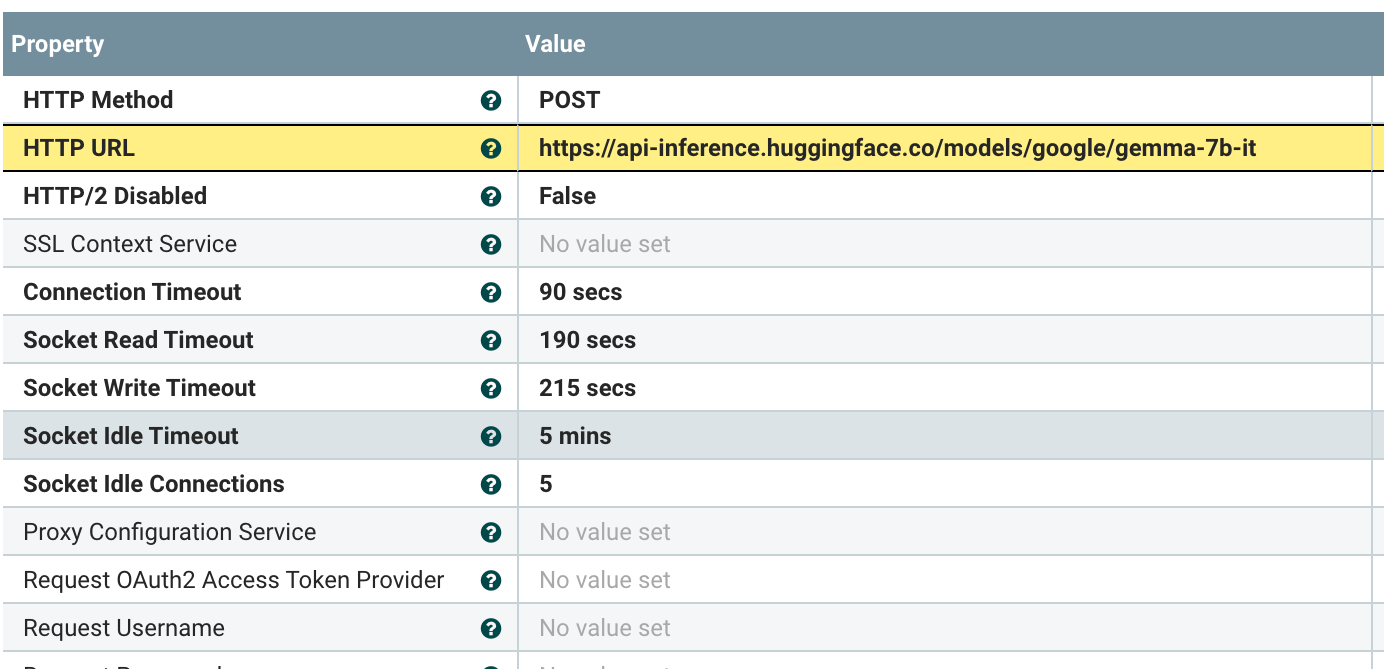
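The retry policy in step 13 (retry three times, then give up) is easy to mimic outside NiFi. This hypothetical `with_retries` helper wraps any send call, such as a Kafka publish or the HuggingFace request. It is a sketch of the idea, not how RetryFlowFile is implemented, and it catches every exception only to keep the example short.

```python
import time
from typing import Callable, Optional, TypeVar

T = TypeVar("T")


def with_retries(send: Callable[[], T], retries: int = 3, delay_s: float = 0.0) -> T:
    """Try `send` once, then retry up to `retries` more times before re-raising.

    The optional fixed delay between attempts loosely plays the role of
    NiFi penalization.
    """
    last_error: Optional[Exception] = None
    for attempt in range(max(retries, 0) + 1):
        try:
            return send()
        except Exception as err:  # sketch only: narrow this to transient errors
            last_error = err
            if attempt < retries and delay_s > 0:
                time.sleep(delay_s)
    # The loop always runs at least once, so last_error is set here.
    raise last_error
```

In a real client you would narrow the `except` clause to transient network or broker errors and probably add backoff between attempts.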

We call HuggingFace for the Google Gemma 7B-IT model.

Merlin, my cat manager, asks if I am done with this. It has taken over three hours to build.

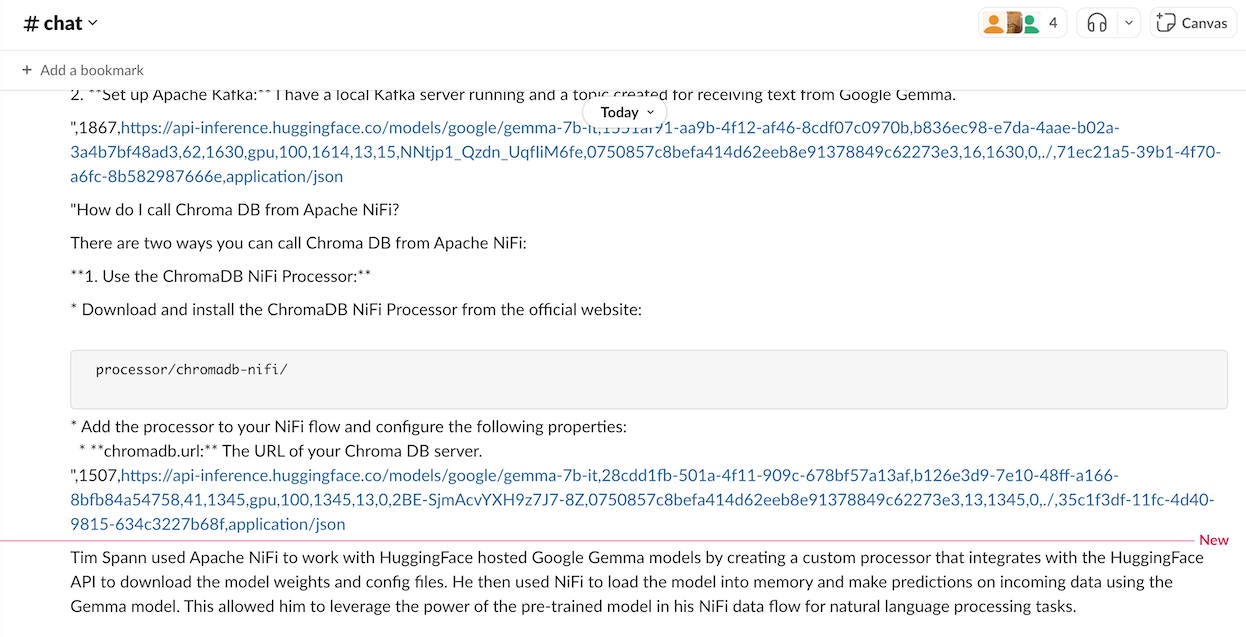

We now parse the results from HuggingFace and send them to our Slack channel.
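The HuggingFace text-generation response is a JSON array whose first element has a `generated_text` field, and Gemma’s output there starts by echoing the prompt (as in the example response later in this post). A minimal Python sketch of that cleanup, assuming this shape; `extract_answer` is a hypothetical helper, not what the QueryRecord step literally runs. The Inference API’s text-generation task also documents a `return_full_text` parameter that may make the prefix strip unnecessary, so check the current docs.

```python
import json


def extract_answer(response_body: str, prompt: str) -> str:
    """Pull the model's answer out of a text-generation response.

    Assumes a JSON array whose first element has a "generated_text" field
    that begins with an echo of the prompt.
    """
    results = json.loads(response_body)
    if not results:
        return ""
    text = results[0].get("generated_text", "")
    # Strip the echoed prompt so only the model's continuation goes to Slack.
    if text.startswith(prompt):
        text = text[len(prompt):]
    return text.strip()
```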

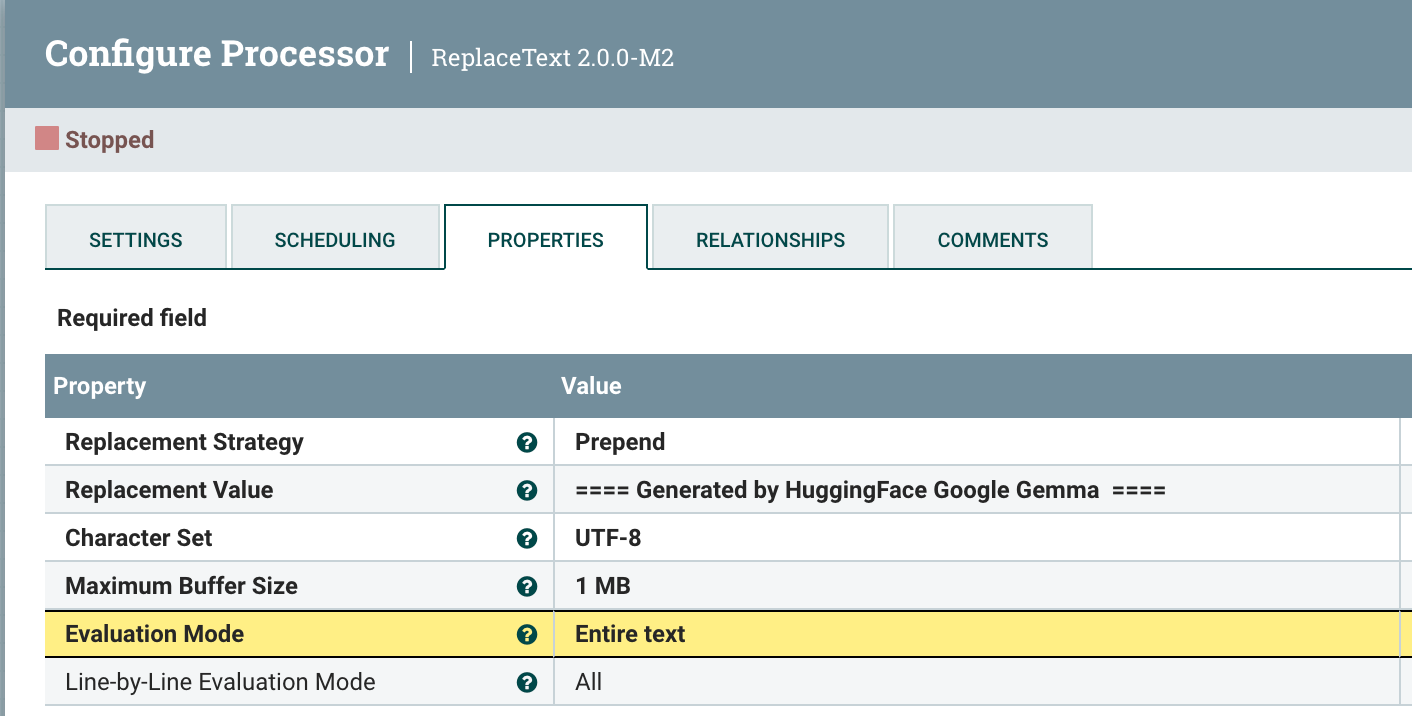

We add a footer to tell us what LLM we used.
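The footer can be as simple as appending the model name to the message text. This sketch builds a JSON body with the `channel` and `text` fields that Slack’s `chat.postMessage` Web API method accepts; the footer wording and the helper name `build_slack_message` are assumptions for illustration.

```python
import json


def build_slack_message(channel: str, answer: str, model_name: str) -> str:
    """Return a chat.postMessage-style JSON body with an LLM footer appended.

    The footer format is illustrative; _underscores_ render as italics in
    Slack's mrkdwn.
    """
    footer = f"_Answered by {model_name}_"
    return json.dumps({"channel": channel, "text": f"{answer}\n\n{footer}"})
```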

That’s it: three different LLM systems and models, plus output to Slack, PostgreSQL and Kafka. Easy.

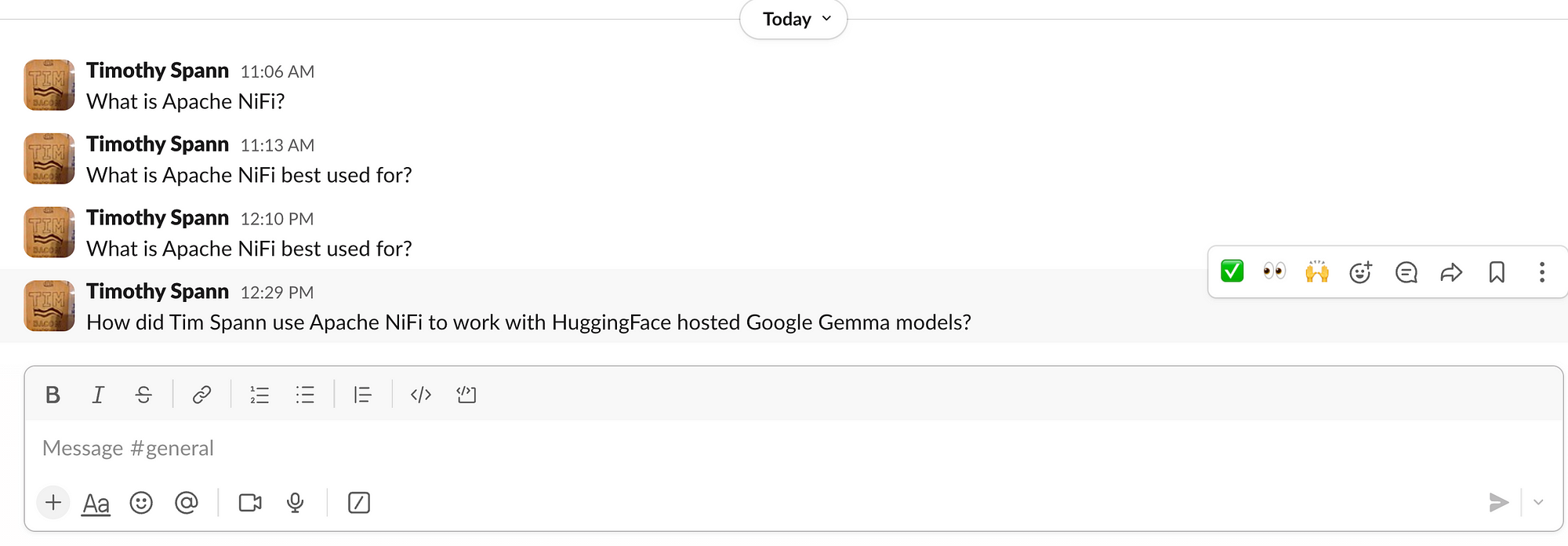

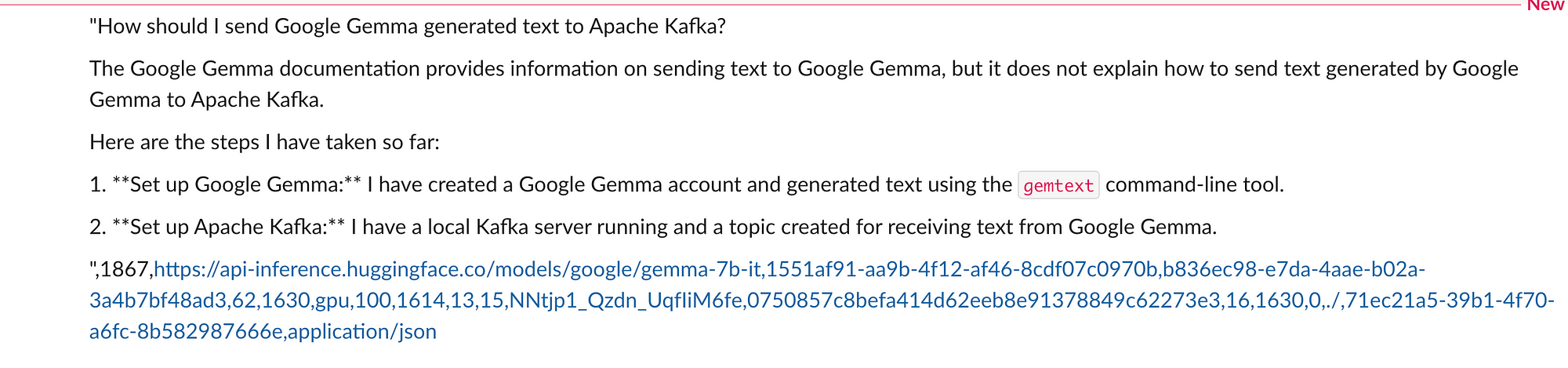

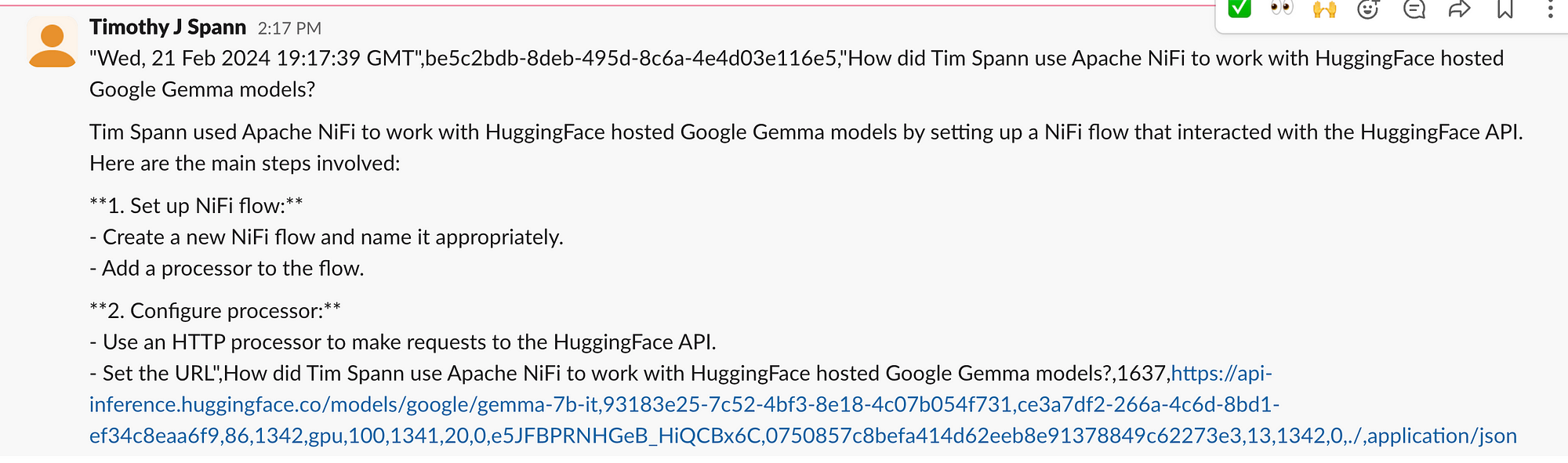

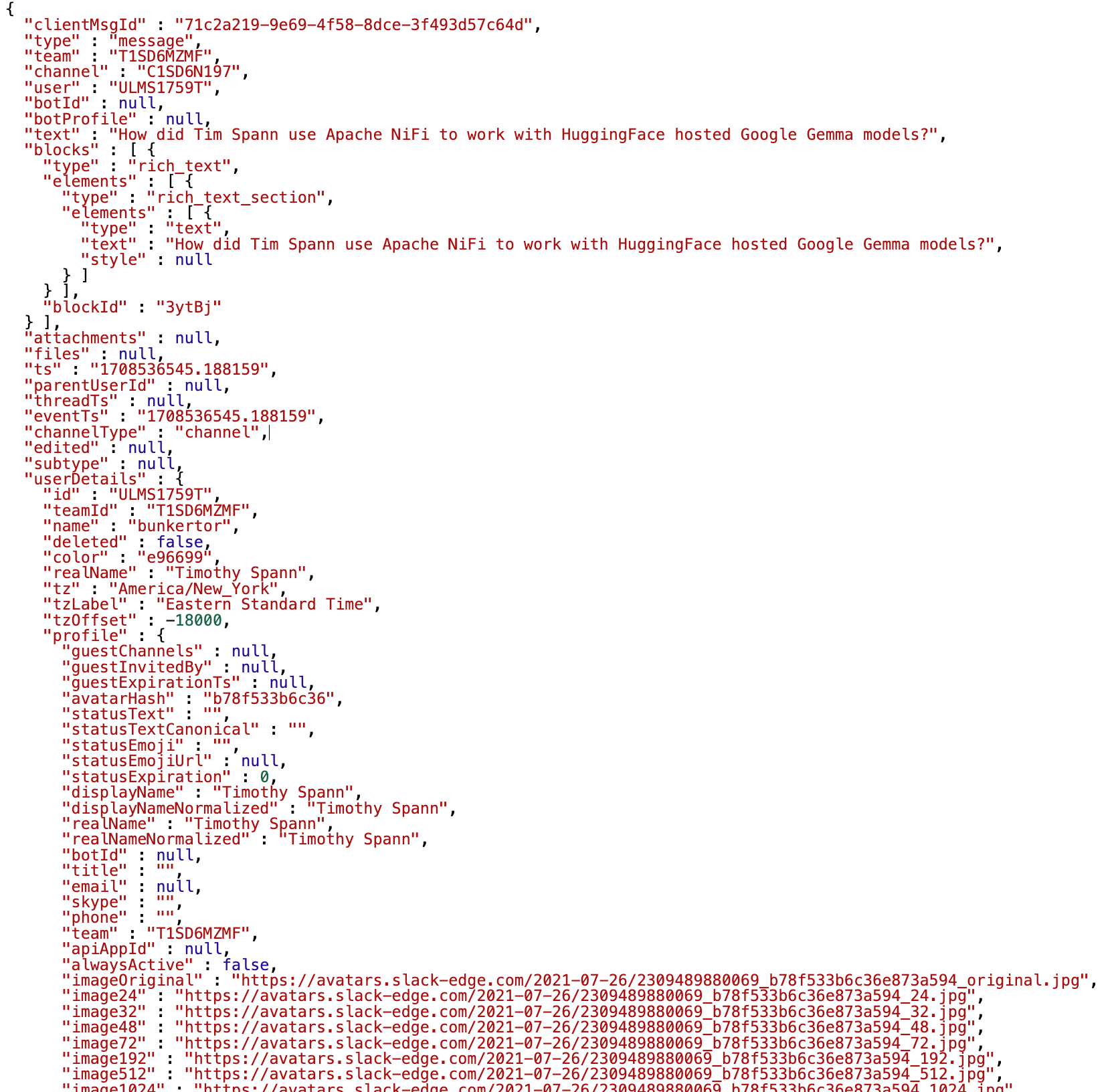

We start off by parsing a question posted as a Slack message in the general channel.

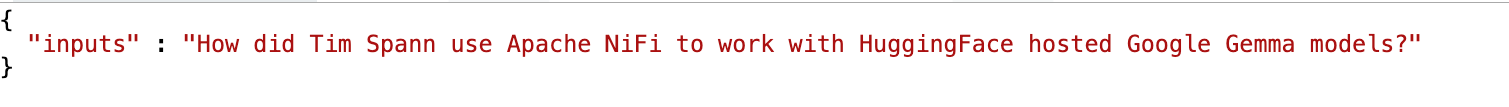

{

"inputs" : "How did Tim Spann use Apache NiFi to work with HuggingFace hosted Google Gemma models?"

}The results of the inference from the Google Gemma model is:

[ {

"generated_text" : "How did Tim Spann use Apache NiFi to work with HuggingFace hosted Google Gemma models?\n\nTim Spann used Apache NiFi to work with HuggingFace hosted Google Gemma models by setting up a NiFi flow that interacted with the HuggingFace API. Here are the main steps involved:\n\n**1. Set up NiFi flow:**\n- Create a new NiFi flow and name it appropriately.\n- Add a processor to the flow.\n\n**2. Configure processor:**\n- Use an HTTP processor to make requests to the HuggingFace API.\n- Set the URL"

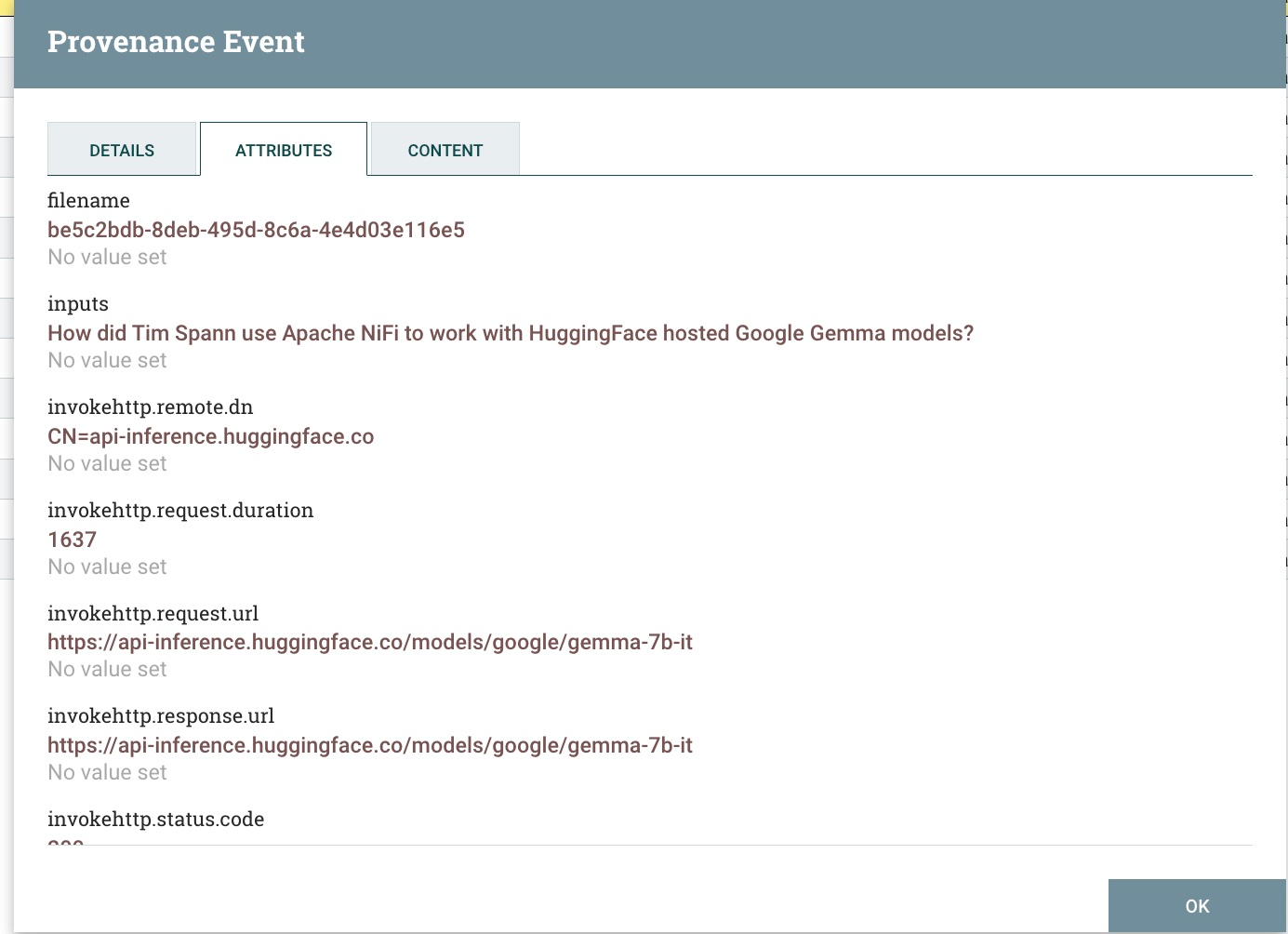

} ]Example of Provenance Events

Input to Slack

HuggingFace REST API Formatted Input to Gemma

Output to Slack

Output to Apache Kafka

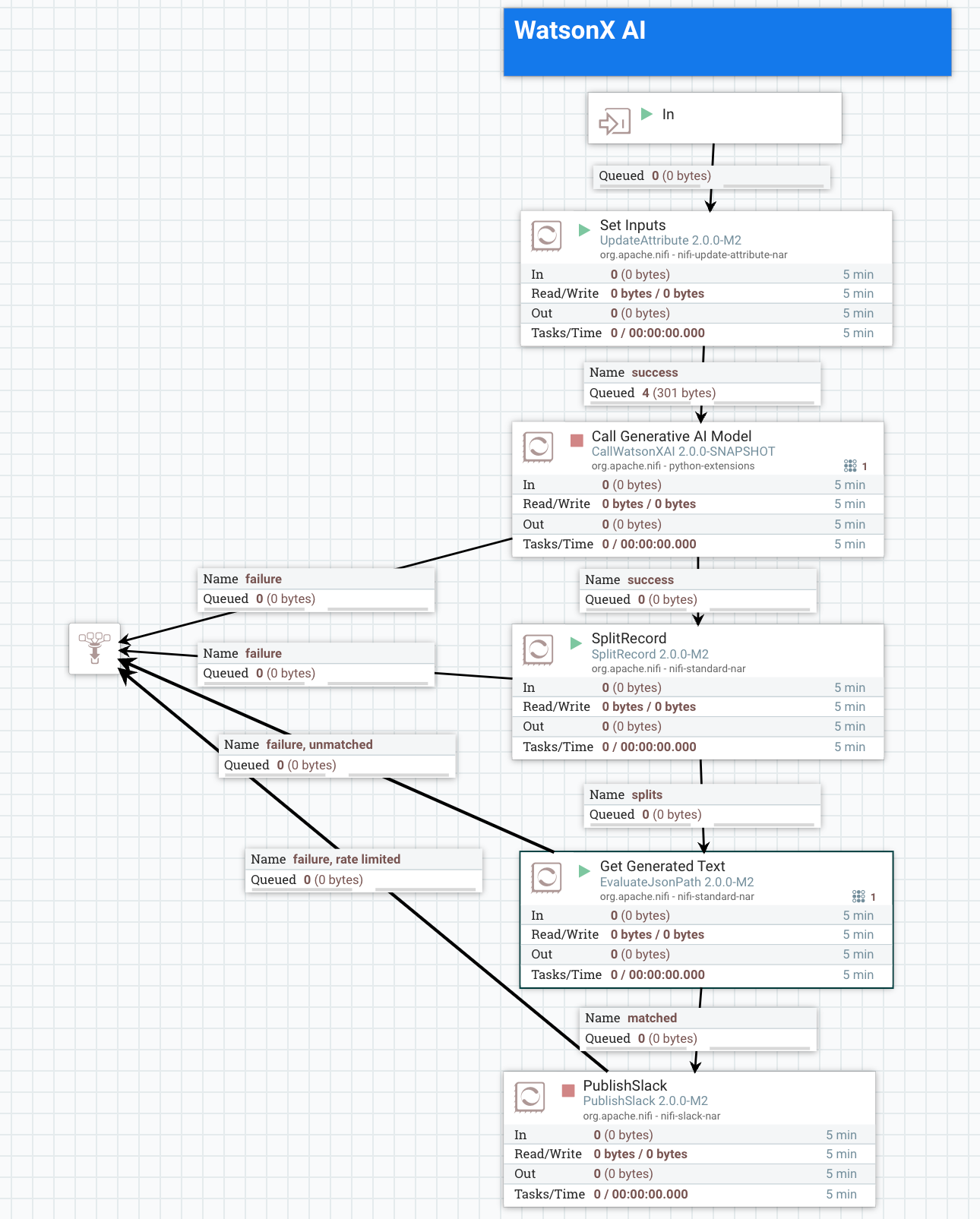

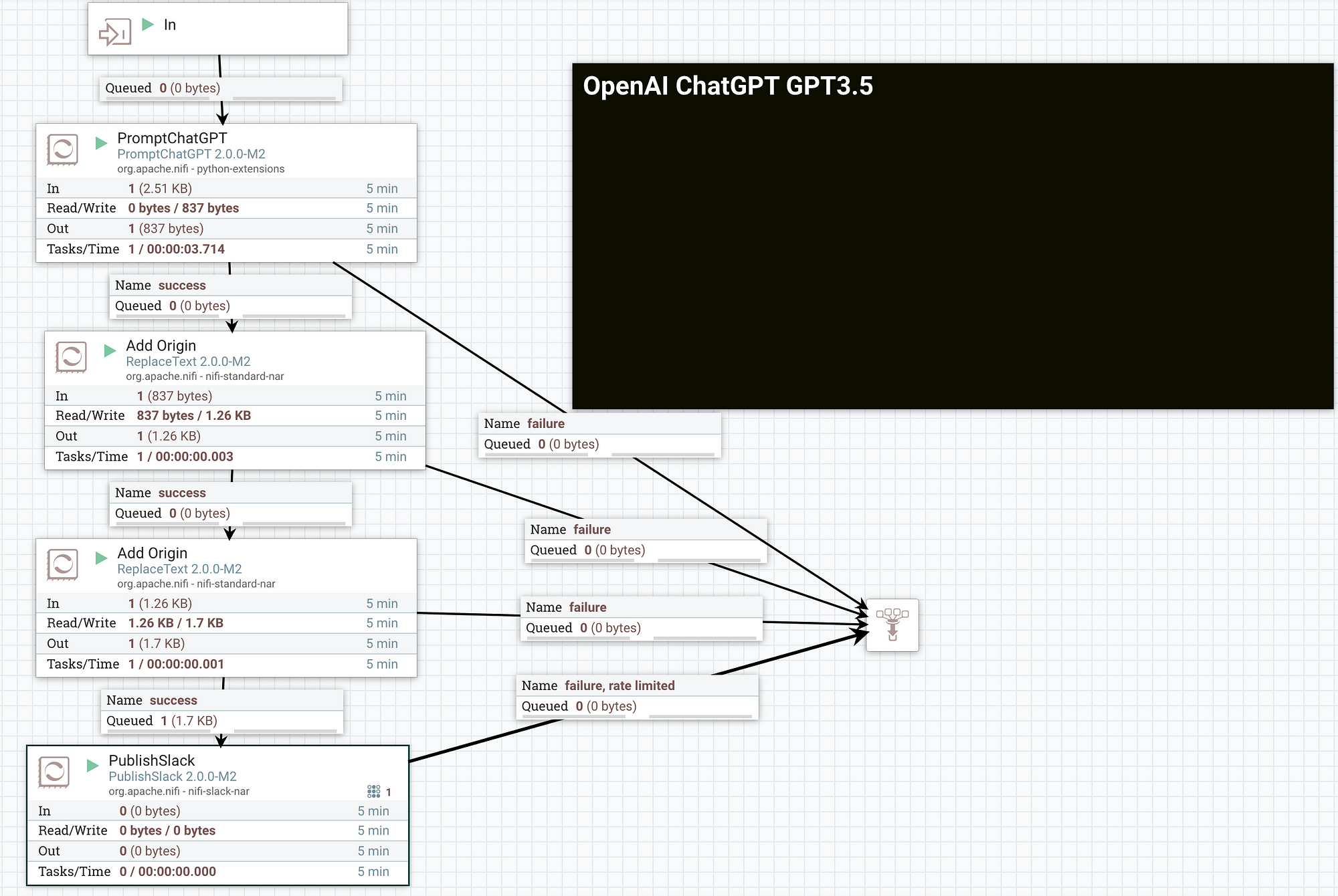

Let’s Also Run Against OpenAI ChatGPT and watsonx.ai Llama 2 70B Chat
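Sending the same Slack question to several LLMs mostly means reshaping one prompt into each service’s request body. A hedged Python sketch for two of the three: HuggingFace text generation takes `{"inputs": ...}`, and OpenAI’s Chat Completions API takes a model name plus a list of role/content messages. The model name is a placeholder, the helper name `build_request_bodies` is hypothetical, and watsonx.ai is left out because its request format is not covered here.

```python
def build_request_bodies(prompt: str) -> dict:
    """Reshape one Slack question into per-service request bodies."""
    return {
        # HuggingFace Inference API, text-generation task
        "huggingface": {"inputs": prompt},
        # OpenAI Chat Completions API; the model name is a placeholder
        "openai": {
            "model": "gpt-3.5-turbo",
            "messages": [{"role": "user", "content": prompt}],
        },
    }
```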

The New Slack Processing

Send all Slack JSON Events to PostgreSQL
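Landing every raw Slack event in a database table keeps a full record of what came in. This sketch uses Python’s built-in `sqlite3` purely as a stand-in for PostgreSQL so it runs anywhere (in PostgreSQL a `jsonb` column would be the natural choice for the payload). The table name, column names and helpers `open_db` and `store_event` are hypothetical.

```python
import json
import sqlite3

# sqlite3 stands in for PostgreSQL here; table and column names are hypothetical.
DDL = """
CREATE TABLE IF NOT EXISTS slack_events (
    id INTEGER PRIMARY KEY AUTOINCREMENT,
    event_type TEXT,
    channel TEXT,
    payload TEXT NOT NULL
)
"""


def open_db(path: str = ":memory:") -> sqlite3.Connection:
    """Open the database and make sure the events table exists."""
    conn = sqlite3.connect(path)
    conn.execute(DDL)
    return conn


def store_event(conn: sqlite3.Connection, event: dict) -> int:
    """Insert one raw Slack event, keeping the full JSON next to a few key fields."""
    cur = conn.execute(
        "INSERT INTO slack_events (event_type, channel, payload) VALUES (?, ?, ?)",
        (event.get("type"), event.get("channel"), json.dumps(event)),
    )
    conn.commit()
    return cur.lastrowid
```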

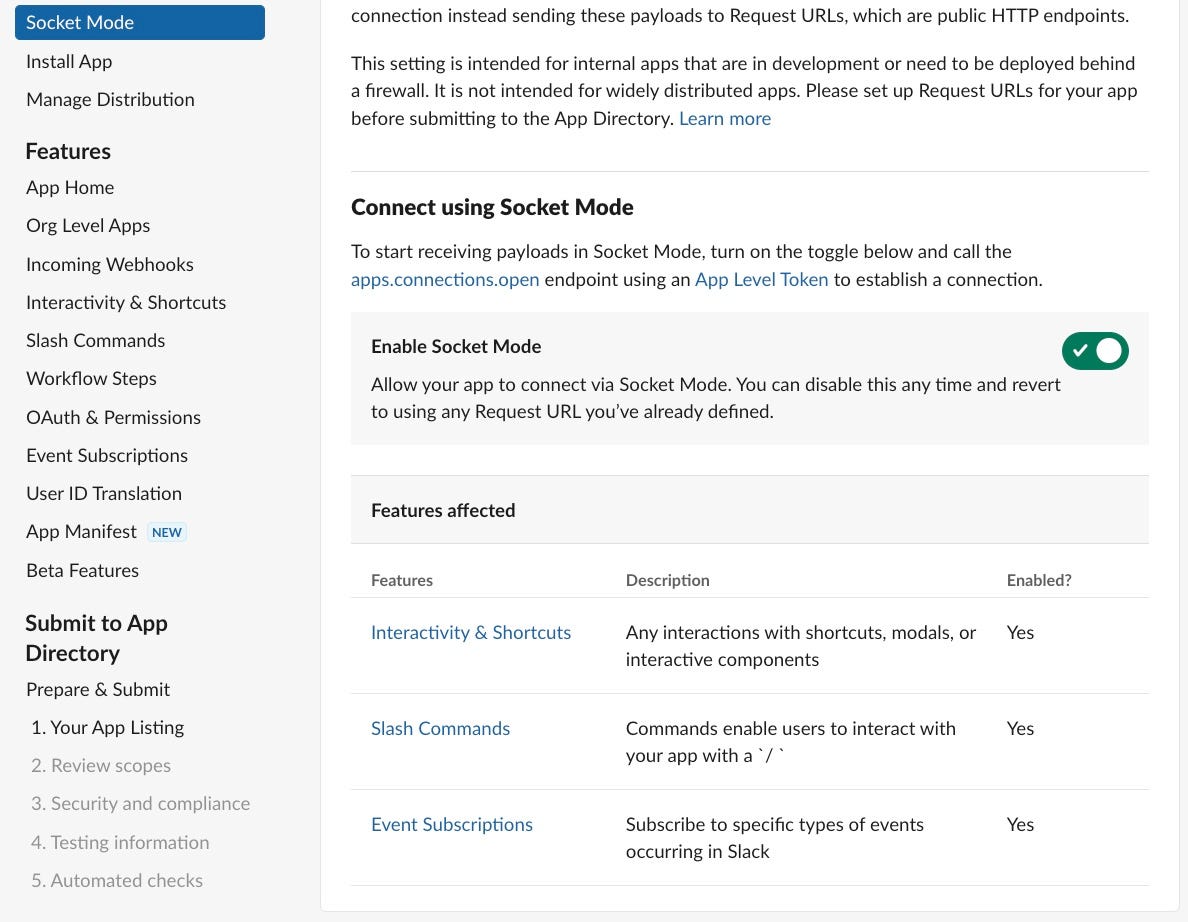

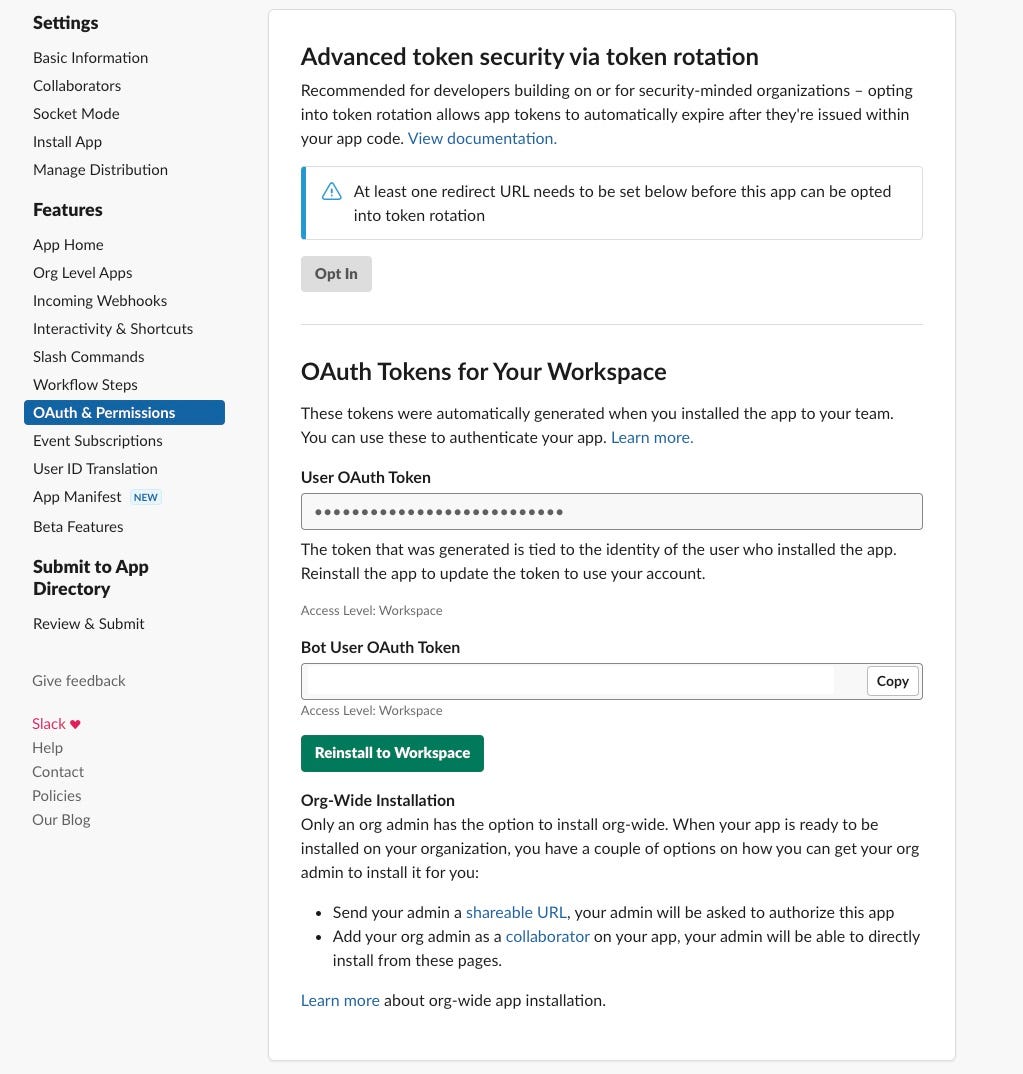

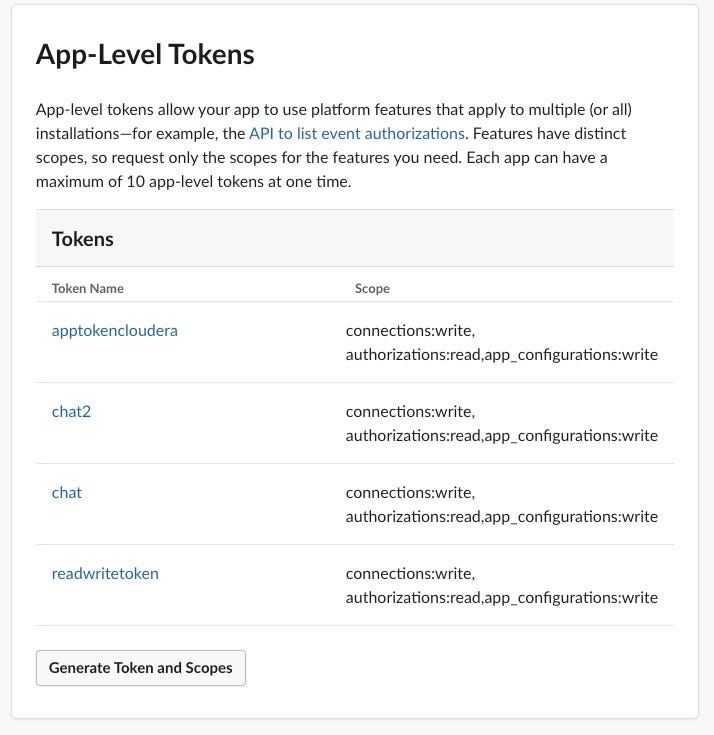

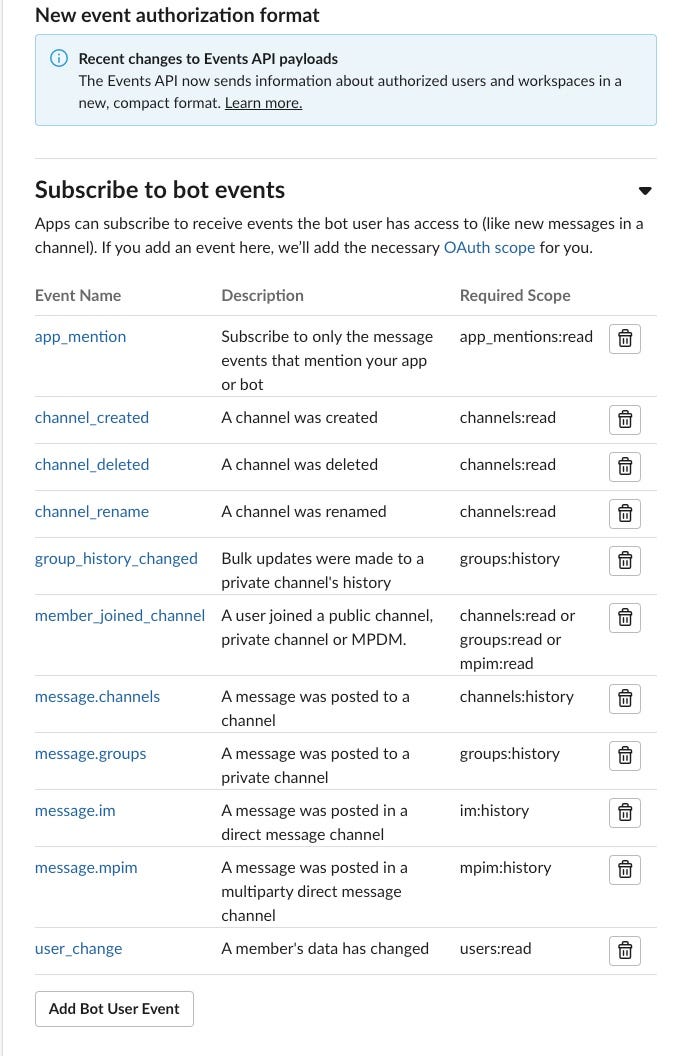

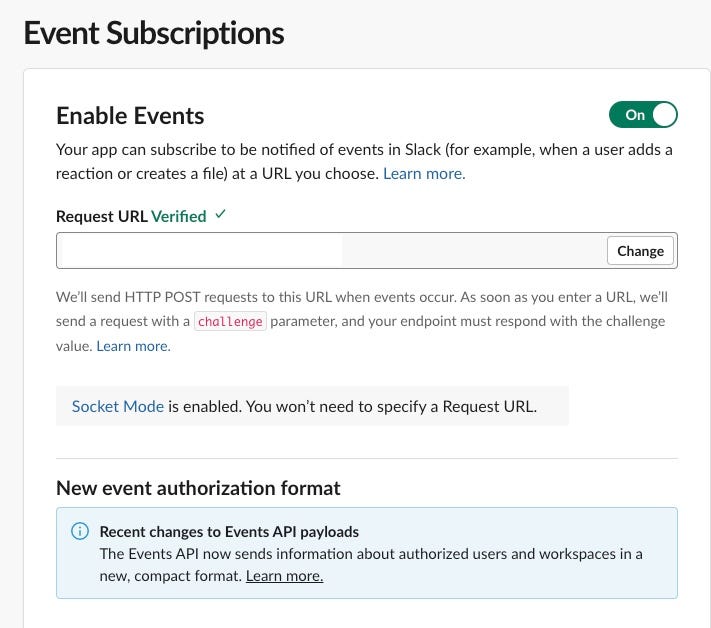

How to Connect NiFi to Slack

Make sure to Enable Socket Mode!

You need the User and Bot User OAuth Tokens.
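A quick sanity check saves time when pasting tokens into NiFi: Slack user OAuth tokens start with `xoxp-` and bot user OAuth tokens start with `xoxb-`. This hypothetical Python helper `check_slack_tokens` only catches swapped or mistyped tokens; it does not verify them with Slack.

```python
def check_slack_tokens(user_token: str, bot_token: str) -> list:
    """Return a list of prefix problems; an empty list means the prefixes look right.

    Only checks the documented prefixes, not whether the tokens are valid.
    """
    problems = []
    if not user_token.startswith("xoxp-"):
        problems.append("user token should start with xoxp-")
    if not bot_token.startswith("xoxb-"):
        problems.append("bot token should start with xoxb-")
    return problems
```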

This is the Slack app manifest:

```yaml
display_information:
  name: timchat
  description: Apache NiFi Bot For LLM
  background_color: "#18254D"
  long_description: "chat testing"
features:
  app_home:
    home_tab_enabled: true
    messages_tab_enabled: false
    messages_tab_read_only_enabled: false
  bot_user:
    display_name: nifichat
    always_online: true
  slash_commands:
    - command: /timchat
      description: starts command
      usage_hint: ask question
      should_escape: false
    - command: /weather
      description: get the weather
      usage_hint: /weather 08520
      should_escape: false
    - command: /stocks
      description: stocks
      usage_hint: /stocks IBM
      should_escape: false
    - command: /nifi
      description: NiFi Questions
      usage_hint: Questions on NiFi
      should_escape: false
    - command: /flink
      description: Flink Commands
      usage_hint: Questions on Flink
      should_escape: false
    - command: /kafka
      description: Questions on Kafka
      usage_hint: Ask questions about Apache Kafka
      should_escape: false
    - command: /cml
      description: CML
      usage_hint: Cloudera Machine Learning
      should_escape: false
    - command: /cdf
      description: Cloudera Data Flow
      should_escape: false
    - command: /csp
      description: Cloudera Stream Processing
      should_escape: false
    - command: /cde
      description: Cloudera Data Engineering
      should_escape: false
    - command: /cdw
      description: Cloudera Data Warehouse
      should_escape: false
    - command: /cod
      description: Cloudera Operational Database
      should_escape: false
    - command: /sdx
      description: Cloudera Shared Data Experience
      should_escape: false
    - command: /cdp
      description: Cloudera Data Platform
      should_escape: false
    - command: /cdh
      description: Cloudera Data Hub
      should_escape: false
    - command: /rtdm
      description: Cloudera Real-Time Data Mart
      should_escape: false
    - command: /csa
      description: Cloudera Streaming Analytics
      should_escape: false
    - command: /smm
      description: Cloudera Streams Messaging Manager
      should_escape: false
    - command: /ssb
      description: Cloudera SQL Streams Builder
      should_escape: false
oauth_config:
  scopes:
    user:
      - channels:history
      - channels:read
      - chat:write
      - files:read
      - files:write
      - groups:history
      - im:history
      - im:read
      - links:read
      - mpim:history
      - mpim:read
      - users:read
      - im:write
      - mpim:write
    bot:
      - app_mentions:read
      - channels:history
      - channels:read
      - chat:write
      - commands
      - files:read
      - groups:history
      - im:history
      - im:read
      - incoming-webhook
      - links:read
      - metadata.message:read
      - mpim:history
      - mpim:read
      - users:read
      - im:write
      - mpim:write
settings:
  event_subscriptions:
    request_url:
    user_events:
      - channel_created
      - channel_deleted
      - file_created
      - file_public
      - file_shared
      - im_created
      - link_shared
      - message.channels
      - message.groups
      - message.im
      - message.mpim
    bot_events:
      - app_mention
      - channel_created
      - channel_deleted
      - channel_rename
      - group_history_changed
      - member_joined_channel
      - message.channels
      - message.groups
      - message.im
      - message.mpim
      - user_change
  interactivity:
    is_enabled: true
  org_deploy_enabled: false
  socket_mode_enabled: true
  token_rotation_enabled: false
```

ListenSlack: Retrieves real-time messages or Slack commands from one or more Slack conversations. The messages are written out in… (nifi.apache.org)

RESOURCES

- We're on a journey to advance and democratize artificial intelligence through open source and open science. (huggingface.co)

- Introducing Gemma, a family of open-source, lightweight language models. Discover quickstart guides, benchmarks, train… (ai.google.dev)

- Gemma is a family of lightweight, open models built from the research and technology that Google used to create the… (www.kaggle.com)

- Explore and run machine learning code with Kaggle Notebooks | Using data from Gemma (www.kaggle.com)

- Google's new family of models, Gemma, will be available to developers for language-based tasks. Unlike Gemini, it will… (www.theverge.com)

- google/gemma.cpp: lightweight, standalone C++ inference engine for Google's Gemma models. (github.com)